Data Warehouse Migration from SSIS to Microsoft Fabric

March 25, 2025

In this project we migrate onpremises data to microsoft fabric. also migrate traditional ssis packages to microsoft fabic.

Description

-

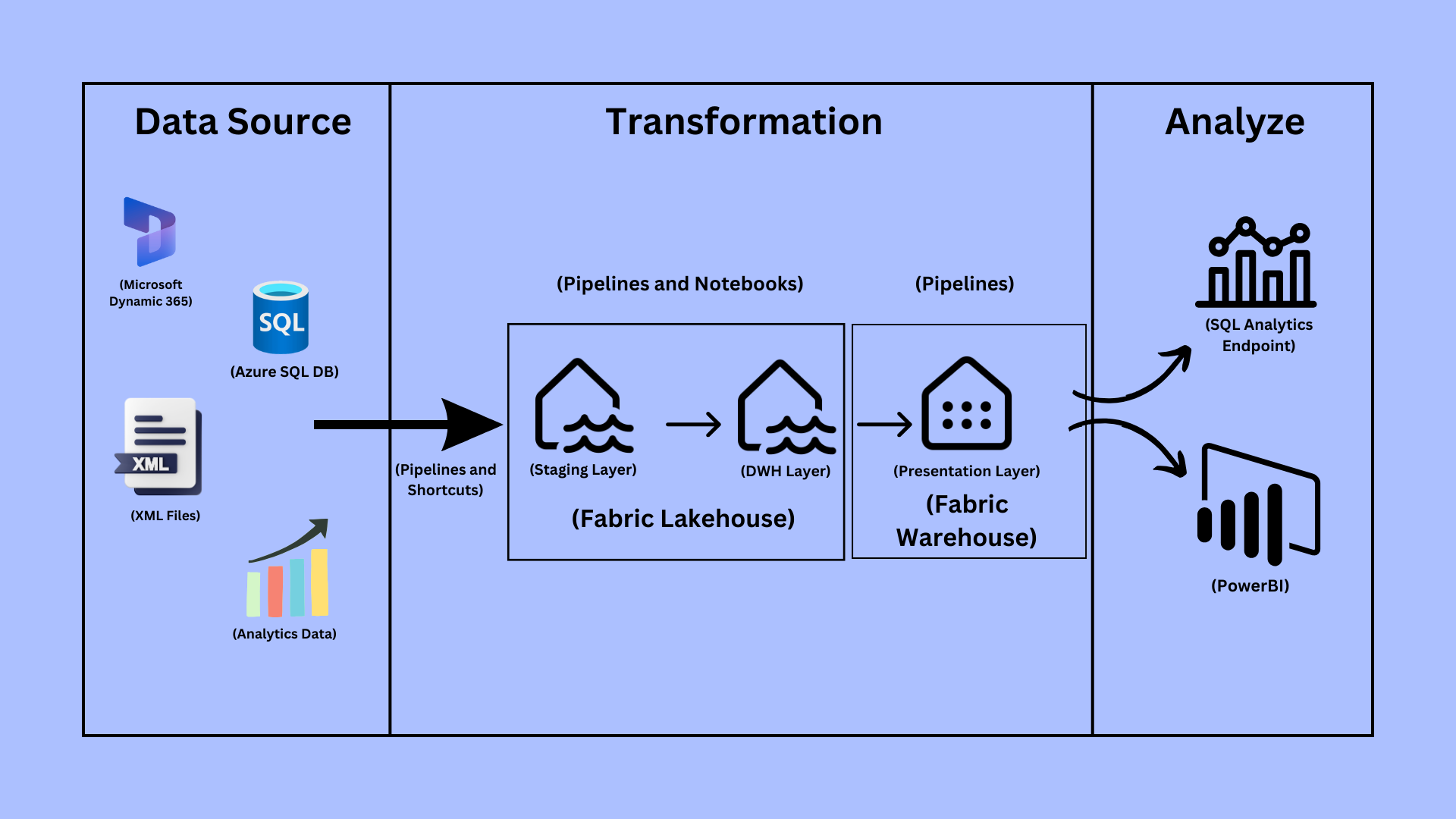

This project involved the migration of a data warehouse infrastructure from SQL Server Integration Services (SSIS) to Microsoft Fabric, a modernized platform for scalable data processing and analytics. The goal was to leverage Microsoft Fabric's capabilities to create a more efficient, scalable, and cloud-based data warehouse architecture. A Medallion architecture was implemented in Microsoft Fabric, which comprised three layers—Staging Layer, DWH Layer, and Presentation Layer—to streamline data ingestion, transformation, and reporting.

-

The Staging Layer utilized Lakehouse to handle raw data ingestion from various sources, including production databases and Dataverse. In the DWH Layer, data was transformed and stored in a structured format using PySpark and Spark SQL via notebooks for advanced data processing. Finally, the Presentation Layer housed reporting-ready data in the Warehouse and was integrated with Power BI for real-time data visualization and analytics.

Key elements of the migration included:

-

Pipeline Automation: Pipelines were created and automated using Microsoft Fabric Pipelines and scheduled to run at one-day intervals, ensuring a consistent and reliable data flow across all layers.

-

Data Transformation: PySpark and Spark SQL were employed to process and transform data within notebooks for the DWH Layer, ensuring high performance and flexibility in handling complex data transformations.

-

Integration with Power BI: The reporting team collaborated to connect the semantic model in the Presentation Layer to Power BI, enabling real-time insights through interactive dashboards and reports.

Technology Used:

-

Microsoft Fabric: For the data architecture and automation of ETL processes.

-

Lakehouse: Utilized for both Staging and DWH layers.

-

Warehouse: Used for the Presentation Layer to store reporting-ready data.

-

Microsoft Fabric Pipelines: To automate data flow and manage the ETL process.

-

PySpark & Spark SQL: For advanced data transformations in notebooks.

-

Power BI: For real-time reporting and data visualization.

Challenges Faced:

-

SQL to PySpark Conversion: One of the significant challenges was converting complex SQL stored procedures used in SSIS into PySpark code due to the limitations of SQL commands in the Lakehouse environment. This required refactoring the logic to efficiently execute it in PySpark notebooks.

-

Data Flow Coordination: Ensuring that the data flowed seamlessly through the multiple layers (Staging, DWH, and Presentation) with minimal latency while maintaining data integrity was a complex task.

-

Performance Optimization: With large datasets being processed daily, optimizing PySpark scripts and pipeline executions to reduce execution time and resource consumption was critical.

-

Data Quality Assurance: During the migration, ensuring that the transformed data retained its accuracy and consistency across layers posed challenges. Implementing validation checks and thorough testing at each stage of the migration helped mitigate data integrity issues.

-

Collaboration Across Teams: Coordinating between the migration team and the reporting team for integrating Power BI with the data warehouse added an extra layer of complexity, especially ensuring that the data model and report structure aligned with business needs.